Model Context Protocol (MCP): The Key to Faster, Simpler AI App Development with Fine

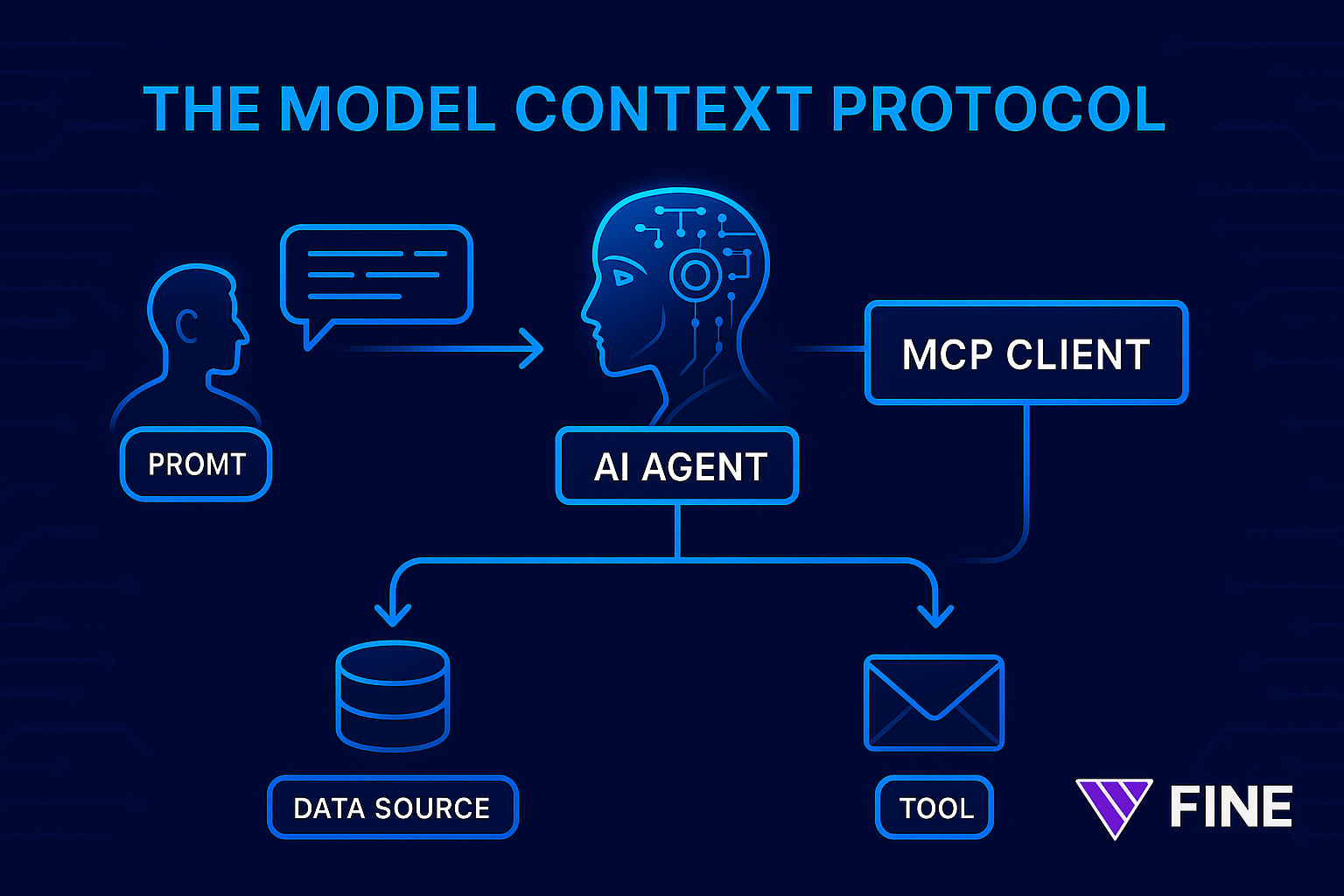

Building AI-powered applications can feel like assembling a jigsaw puzzle. You need to wrangle large language models (LLMs), connect to databases and APIs, manage your app’s context window (the limited “memory” of what the model can see at once), and somehow glue it all together. Enter the Model Context Protocol (MCP) – a new open standard that’s transforming how developers build AI apps. Think of MCP as the USB-C of AI applications, a universal connector that lets your AI agents easily plug into data sources and tools (Introduction - Model Context Protocol). In this post, we’ll break down what MCP is in builder-friendly terms, how it accelerates AI app development, and how the all-in-one Fine.dev platform uses MCP to help you build AI apps faster with less friction. By the end, you’ll see how to get started with Fine and use MCP as a core enabler of your next AI-powered project.


What is the Model Context Protocol (MCP)?

MCP (Model Context Protocol) is an open standard that defines how applications provide context and connect external resources to AI models (Introducing the Model Context Protocol \ Anthropic). In other words, it’s a common protocol that lets AI systems securely talk to the outside world. Just as USB-C provides one standard plug for all your devices, MCP provides a single, consistent way for AI applications (like chatbots, coding assistants, or any AI agent) to interface with different data sources, tools, and services (Introduction - Model Context Protocol) (Model Context Protocol (MCP): 8 MCP Servers Every Developer Should Try! - DEV Community).

Builder translation: Instead of writing custom integration code every time you want an AI model to fetch some data or perform an action, you use MCP’s conventions. You either expose your data or service as an MCP server, or use (or build) an AI client that can connect to MCP servers. The protocol handles the messaging, so any MCP-compatible client can work with any MCP-compatible server without custom code (Understanding the Model Context Protocol (MCP) | deepset Blog). It’s like a contract: as long as both sides speak MCP, the AI can request info or actions and get results in a structured way (Understanding the Model Context Protocol (MCP) | deepset Blog).

How MCP Works (in a Nutshell)

At a high level, MCP follows a client–server architecture (Introducing the Model Context Protocol \ Anthropic):

- MCP Servers: These are lightweight connectors or adapters that interface with a specific tool or data source (e.g. a database, a web API, Gmail, Slack, etc.). Each server exposes certain capabilities (like “search emails” or “query database”) via the standardized MCP interface.

- MCP Clients: These live with the AI model (often embedded in the AI application or agent). Each client maintains a connection to one MCP server, and an application can run several clients to reach several servers. When the AI needs something (data or an action), it sends a request via the client to the appropriate server.

- Hosts: The environment where the AI and MCP clients run (this could be a chat app, IDE, or your AI-powered app itself). The host initiates the connections and orchestrates the overall session (Core architecture - Model Context Protocol).
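The three roles above can be sketched in a few lines of Python. This is a toy, in-process illustration rather than the MCP SDK: the class names `ToyServer`, `ToyClient`, and `ToyHost` and the `search_emails` tool are hypothetical, and real MCP clients and servers exchange messages over a transport instead of calling each other's methods directly.

```python
from typing import Callable, Dict, List


class ToyServer:
    """Stands in for an MCP server: wraps one data source and exposes named tools."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._tools: Dict[str, Callable[..., str]] = {}

    def register(self, tool_name: str, fn: Callable[..., str]) -> None:
        self._tools[tool_name] = fn

    def list_tools(self) -> List[str]:
        return sorted(self._tools)

    def call(self, tool_name: str, **arguments: str) -> str:
        return self._tools[tool_name](**arguments)


class ToyClient:
    """Stands in for an MCP client: holds a connection to exactly one server."""

    def __init__(self, server: ToyServer) -> None:
        self.server = server

    def call_tool(self, tool_name: str, **arguments: str) -> str:
        return self.server.call(tool_name, **arguments)


class ToyHost:
    """Stands in for the host app: owns one client per server and routes calls."""

    def __init__(self) -> None:
        self._clients: Dict[str, ToyClient] = {}

    def connect(self, server: ToyServer) -> None:
        self._clients[server.name] = ToyClient(server)

    def available_tools(self) -> Dict[str, List[str]]:
        return {name: c.server.list_tools() for name, c in self._clients.items()}

    def use(self, server_name: str, tool_name: str, **arguments: str) -> str:
        return self._clients[server_name].call_tool(tool_name, **arguments)


# Hypothetical email server with a single "search_emails" capability.
INBOX = ["Invoice for March", "Lunch on Friday?", "Invoice for April"]
email_server = ToyServer("email")
email_server.register(
    "search_emails",
    lambda query: ", ".join(s for s in INBOX if query.lower() in s.lower()),
)

host = ToyHost()
host.connect(email_server)
result = host.use("email", "search_emails", query="invoice")
```

The point of the split is that the host never needs to know how a server does its work. Connecting a second server (say, a database) would only take another `connect` call.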

When a user gives a prompt or task, the model decides which tool or context it needs, and the host passes that request to the MCP client for the relevant server. The client sends a message to that MCP server, which does the heavy lifting (e.g. fetching the latest stock price from an API or sending an email via Gmail’s API). The server returns the result, and the AI can incorporate it into its response. All of this happens through a consistent message format defined by MCP (JSON-RPC 2.0 under the hood), rather than ad-hoc prompt engineering. In fact, MCP defines clear patterns for providing context to models, managing tool use, and handling responses – much like how REST standardized web service communication (Understanding the Model Context Protocol (MCP) | deepset Blog). This means developers don’t have to reinvent the wheel for each new data source or worry about the low-level prompt structure; MCP has a blueprint for it.
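To make the "consistent message format" less abstract, here is a minimal sketch of what one tool call could look like on the wire. MCP messages are JSON-RPC 2.0, and `tools/call` is the method a client uses to invoke a tool. The tool name `get_stock_price`, its `symbol` argument, the hard-coded price, and the helper function names are all assumptions for illustration, and the sketch only builds and parses messages without any real transport.

```python
import json
from typing import Any, Dict


def make_tool_call(request_id: int, tool: str, arguments: Dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 request asking a server to run one tool."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    })


def handle_request(raw: str) -> str:
    """Toy server side: run the (hypothetical) tool and wrap the result."""
    request = json.loads(raw)
    params = request.get("params", {})
    if request.get("method") != "tools/call":
        body = {"error": {"code": -32601, "message": "Method not found"}}
    elif params.get("name") != "get_stock_price":
        body = {"error": {"code": -32602, "message": "Unknown tool"}}
    else:
        # Hard-coded value standing in for a real API lookup.
        text = f"{params['arguments']['symbol']}: 123.45"
        body = {"result": {"content": [{"type": "text", "text": text}], "isError": False}}
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], **body})


def extract_text(raw_response: str) -> str:
    """Client side: pull the text content out of a successful response."""
    response = json.loads(raw_response)
    if "error" in response:
        raise RuntimeError(response["error"]["message"])
    return "".join(
        part["text"] for part in response["result"]["content"] if part["type"] == "text"
    )


request = make_tool_call(1, "get_stock_price", {"symbol": "ACME"})
answer = extract_text(handle_request(request))
```

Because every server answers in the same envelope, the client-side parsing in `extract_text` does not change when the tool behind it does.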

Why MCP Matters for AI App Developers

MCP is a game-changer because it bridges the gap between isolated AI models and the rich world of data and services they often need to be truly useful (Introducing the Model Context Protocol \ Anthropic). Here are some of the big benefits of MCP:

- Standardized Integrations: No more writing one-off code for every API or database your AI needs to use. MCP provides a universal adapter layer. Once a data source has an MCP server, any MCP-compatible AI agent can plug into it and retrieve info or trigger actions in a consistent way (Model Context Protocol (MCP): 8 MCP Servers Every Developer Should Try! - DEV Community). This fosters an ecosystem of reusable connectors – build a connector once and any future LLM or AI app can reuse it. It’s plug-and-play AI development.

- Enhanced Context & “App Memory”: Because MCP lets the AI fetch data on demand, you don’t have to fit everything into the model’s context window up front. Your AI app can maintain long-term context or memory by storing info externally and pulling it in when needed. For example, an MCP server could interface with a database or knowledge base containing chat history, user preferences, or any extended context. The AI can query that via MCP, effectively extending its memory beyond what fits in a single context window. Even better, with new remote MCP servers, the server can retain user-specific context between sessions, providing a persistent experience like an app memory (Cloudflare Accelerates AI Agent Development With The Industry's First Remote MCP Server | Cloudflare). (No more repeating the entire conversation history to the model every time – the context can be stored once and referenced as needed.)

- Action-Oriented AI (beyond just text answers): Traditionally, LLMs could only answer questions or give advice. With MCP, they can take actions on the user’s behalf in a controlled manner. In the flow described earlier, for instance, a server could send an email through Gmail’s API at the AI’s request. MCP opens the door to AI agents that can, for instance, book calendar events, execute code deployments, or interact with enterprise systems directly (Cloudflare Accelerates AI Agent Development With The Industry's First Remote MCP Server | Cloudflare) – all through standard connectors. This can dramatically streamline workflows (imagine an AI agent that not only tells you “Looks like you’re low on inventory” but also automatically creates a purchase order through an MCP-connected ERP system).

- Faster Development Cycles: By removing the need for bespoke integrations, MCP lets developers prototype and iterate faster. Want to add a new feature to your AI app that uses a third-party service? If an MCP server exists for it, you can connect it in minutes without diving into API docs and writing boilerplate. Even if it doesn’t exist yet, MCP’s standard makes it straightforward to implement one. This means you spend more time on your app’s logic and less on plumbing. As one analyst put it, MCP is proving “incredibly valuable for building AI applications faster” by becoming the new standard for communicating context in agent-based systems (Understanding the Model Context Protocol (MCP) | deepset Blog).

- Interoperability & Flexibility: MCP is model-agnostic and platform-agnostic. Your AI app can switch out the underlying LLM (be it OpenAI, Anthropic’s Claude, etc.) or move between environments, and as long as the new environment supports MCP, your integrations still work (Introduction - Model Context Protocol). This decoupling of tools from the AI model gives you flexibility to use the best model for the job without rebuilding integrations. It also means different AI applications can share a common pool of MCP servers. For developers, this hints at a future where AI services and tools compose together more easily – akin to how any browser can talk to any web server thanks to HTTP.

- Built-in Best Practices (Security & Auth): Integrating an AI with sensitive data or powerful tools raises security questions – you don’t want an AI agent emailing your entire contact list or pulling confidential data unless authorized. MCP was designed with security in mind, and remote MCP servers lean on battle-tested standards like OAuth for authentication and permissioning (Build and deploy Remote Model Context Protocol (MCP) servers to Cloudflare). In an MCP setup, users explicitly grant an AI agent access to a resource via the MCP server’s auth flow, so there’s clear permission control. Scopes can restrict the AI to only what it’s allowed to do. This standardized auth means developers don’t have to roll their own unsafe solutions. The result is improved security for AI integrations (Model Context Protocol (MCP): 8 MCP Servers Every Developer Should Try! - DEV Community), making it viable to connect enterprise systems to AI without opening undue risk.
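The permission idea in the last bullet can be sketched as a check that runs before any tool does. This is only an illustration of scope gating: the `Grant` and `ScopedTool` structures, the scope names, and the two tools are hypothetical, and a real deployment would rely on an OAuth authorization flow instead of an in-memory set of scopes.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Set


@dataclass
class Grant:
    """Scopes a user has approved for one agent (hypothetical structure)."""
    user: str
    scopes: Set[str] = field(default_factory=set)


@dataclass
class ScopedTool:
    """A tool plus the single scope a caller must hold to run it."""
    name: str
    required_scope: str
    run: Callable[[str], str]


class PermissionDenied(Exception):
    pass


def call_tool(tools: Dict[str, ScopedTool], grant: Grant, name: str, arg: str) -> str:
    """Run a tool only if the user's grant includes the scope it requires."""
    tool = tools[name]
    if tool.required_scope not in grant.scopes:
        raise PermissionDenied(f"{name} requires scope {tool.required_scope!r}")
    return tool.run(arg)


tools = {
    "read_contacts": ScopedTool(
        "read_contacts", "contacts:read", lambda q: f"contacts matching {q}"
    ),
    "send_email": ScopedTool(
        "send_email", "email:send", lambda to: f"sent to {to}"
    ),
}

# The user approved read access only, so sending email must be refused.
grant = Grant(user="alice", scopes={"contacts:read"})
allowed = call_tool(tools, grant, "read_contacts", "bob")

try:
    call_tool(tools, grant, "send_email", "everyone@example.com")
    denied = False
except PermissionDenied:
    denied = True
```

Keeping the check in one place, outside the tools themselves, is what lets an agent be handed read access without also gaining the ability to email the whole contact list.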

In short, MCP makes AI development more seamless, scalable, and secure. It takes care of the “glue” – the prompt structuring, tool invocation, and context passing – so you can focus on building cool features, not wrestling with how to feed data into your model.

Accelerating AI App Development with Fine.dev and MCP

So how does this relate to Fine.dev, and what is Fine exactly? Fine is an all-in-one platform that lets anyone build, deploy, and run AI-powered web applications with minimal effort. It’s often described as having an “AI junior developer” on your team. You simply tell Fine (in plain English) what you want your app to do, and Fine’s AI agents handle building the frontend, backend, database, and deployment for you (Fine: Anyone can build.). In other words, Fine is an AI app builder – from UI to server logic, it can generate the code and set up the infrastructure automatically.

Crucially, Fine.dev bakes in all the pieces an AI app needs out of the box. As Fine’s founder describes, “every project our users build comes with auth, database, file storage, LLM integration, and hosting, all working out of the box.” (We built our entire AI App Builder on Cloudflare stack, and it's awesome | Hacker News) That’s a huge boost for developer productivity – no wrestling with 10 different services or cloud accounts just to get a basic app running. Everything is pre-integrated and ready to go:

- User Authentication: Built-in login/auth system so your users can sign up and sign in without extra services (Fine: Anyone can build.).

- Database: An instantly available SQL database (powered by Cloudflare D1, which is built on SQLite) for your app’s data (We built our entire AI App Builder on Cloudflare stack, and it's awesome | Hacker News).

- File Storage: Built-in object storage (via Cloudflare R2) for any media or file needs (We built our entire AI App Builder on Cloudflare stack, and it's awesome | Hacker News).

- Backend Functions: Serverless backend logic using Cloudflare Workers – you can extend your app without managing servers (Fine: Anyone can build.).

- LLM Integration: Easy integration of large language models like OpenAI GPT-4 or Anthropic Claude into your app’s features (Fine: Anyone can build.).

- One-Click Deployment: Automatic deployment to a global edge network (Cloudflare’s network) so your app is live and fast everywhere by default (Fine: Anyone can build.).

Fine’s philosophy is to eliminate the friction in going from an idea to a live product. As the founder put it, the goal is that “anyone, literally anyone, will be able to build and launch something useful… without wrestling with infrastructure… without spending weeks before seeing something live.” (We built our entire AI App Builder on Cloudflare stack, and it's awesome | Hacker News) Fine achieves this “magic” by leveraging a Cloudflare-based stack where the infrastructure just melts away behind the scenes (We built our entire AI App Builder on Cloudflare stack, and it's awesome | Hacker News). Fine uses Cloudflare Workers for compute, D1 for the database, and so on (We built our entire AI App Builder on Cloudflare stack, and it's awesome | Hacker News) – meaning your app runs on a globally distributed platform, scalable and secure by default.

Now, where does MCP come in? Fine.dev embraces MCP as a core part of simplifying AI app development. Fine’s platform is essentially built to harness AI agents with context. When you tell Fine what app to build, an LLM agent generates code and configures your app. That agent needs context: it needs to understand your request, the code it’s writing, the data your app will handle, etc. MCP’s principles are at play here – Fine’s AI agent can pull in context about common app functionalities (auth, database schema) and use standardized “knowledge” to assemble your app quickly. In fact, Fine even has a proprietary knowledge graph (“Atlas”) that gives the AI agent awareness of your codebase and dependencies (Fine - AI-Powered Development | Fine), akin to providing it an MCP-like interface to your project’s context.

Moreover, once your app is built on Fine, you can incorporate AI features into that app just as easily. Suppose you build an AI-powered SaaS app that needs to, say, analyze user data from the database and send summary reports via email. Because Fine already has MCP-compatible connectors behind the scenes (database, email service, etc.), your app’s AI components can use them without you writing glue code. Fine is built on the same Cloudflare platform that introduced the first remote MCP server, so your app’s AI agents can securely connect to tools over the internet – no local setup needed (Cloudflare Accelerates AI Agent Development With The Industry's First Remote MCP Server | Cloudflare). This means the AI features in your app can have persistent state and memory (using Fine’s database or Cloudflare’s Durable Objects) and can perform multi-step actions (using Cloudflare Workflows) as first-class capabilities. Fine essentially provides an MCP-enabled runtime for your AI app.

To make this concrete, imagine you’re using Fine to build a customer support chatbot for a product. You want the chatbot to answer questions, but also create support tickets in Jira and look up order info from a database. Without MCP, you’d have to manually code the integrations with Jira’s API and your database, and carefully craft prompts to include that data. With Fine and MCP, much of this is handled for you. Fine would scaffold the app with a database and possibly an MCP server interface to it. The chatbot (an AI agent in the app) can be given access to a “Jira MCP server” and your app’s database through MCP. So when a user asks “What’s the status of my order #1234?”, the AI client in your app queries the database via MCP, and when they say “please escalate this issue,” the AI can create a Jira ticket via another MCP call. All of it happens behind a consistent API, accelerating your development and reducing friction. You focus on defining the high-level workflow, and Fine’s AI + MCP handles the rest.
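The two requests in this scenario can be sketched as two tool calls behind one calling convention. Everything here is a stand-in chosen for illustration and is not Fine's actual implementation: `lookup_order` and `create_ticket` are hypothetical tools, in-memory structures replace the database and Jira, the `SUP-` ticket prefix is made up, and simple keyword routing takes the place of the model choosing a tool.

```python
import re
from typing import Callable, Dict, List

ORDERS: Dict[str, str] = {"1234": "shipped"}  # stand-in for the app database
TICKETS: List[str] = []                       # stand-in for a Jira project


def lookup_order(order_id: str) -> str:
    """Hypothetical database tool: report the status of one order."""
    status = ORDERS.get(order_id)
    if status is None:
        return f"Order #{order_id} not found."
    return f"Order #{order_id} is {status}."


def create_ticket(summary: str) -> str:
    """Hypothetical ticketing tool: record a ticket and return its key."""
    TICKETS.append(summary)
    return f"Created ticket SUP-{len(TICKETS)}."


# One registry, one calling convention, regardless of what sits behind each tool.
TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_order": lookup_order,
    "create_ticket": create_ticket,
}


def handle(message: str) -> str:
    """Pick a tool for the message. A real agent lets the model choose;
    this keyword routing only keeps the sketch self-contained."""
    order = re.search(r"order #(\d+)", message, re.IGNORECASE)
    if order:
        return TOOLS["lookup_order"](order.group(1))
    if "escalate" in message.lower():
        return TOOLS["create_ticket"](message)
    return "No tool needed; answer from the model directly."


status_reply = handle("What's the status of my order #1234?")
ticket_reply = handle("please escalate this issue")
```

Swapping the in-memory stand-ins for real connectors would change the bodies of the two tool functions but not `handle` or the registry, which is the kind of decoupling the paragraph above describes.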

Fine.dev’s real-world users are already reaping the benefits of this approach. Early adopters have used Fine to ship everything from AI agents and micro-SaaS apps to internal business tools in a fraction of the time it would normally take (We built our entire AI App Builder on Cloudflare stack, and it's awesome | Hacker News). And despite the diverse use cases, they report that working with all the integrated pieces is “smooth as butter” – the infrastructure complexity just disappears (We built our entire AI App Builder on Cloudflare stack, and it's awesome | Hacker News). This is the power of having an all-in-one platform that leverages standards like MCP: you get to build faster and with less friction. Fine’s stack handles the heavy lifting (scaling, auth, integrations), so you can iterate on your idea quickly. In fact, Fine launched its platform by turning a single prompt into a production-ready app (We built our entire AI App Builder on Cloudflare stack, and it's awesome | Hacker News), showcasing how an AI (with proper context and integration) can generate a fully working application almost instantly. That’s MCP in action – providing the AI with the building blocks it needs, so it can do in minutes what used to take developers weeks.

Getting Started with Fine (and MCP) – Build Your AI App Now

One of the best parts about Fine.dev is how easy it is to get started, even if you’re not an AI expert. You don’t have to worry about setting up any of the MCP servers or infrastructure yourself – Fine has done the hard work for you. Here’s how you can start building with Fine and leverage MCP as your secret sauce:

- Sign Up for Fine.dev: Head over to Fine’s website and create a free account. Fine offers a free tier to experiment with your first app (Fine: Anyone can build.), so you can try it out with zero cost. No complex installation or environment setup – everything runs in the cloud.

- Describe Your App Idea: Once you’re in, you’ll use Fine’s AI App Builder. This is where the magic happens. You simply describe in natural language what you want your app to do. For example, you might say: “I want to build a personal finance app that tracks expenses, uses an AI chatbot to give budgeting tips, and allows logging in with Google.” Don’t worry about phrasing it perfectly – just explain the features or user stories. Fine’s AI agent will understand your intent.

- Watch Fine’s AI Build It: When you submit your description, Fine’s AI coding agent (powered by LLMs) goes to work. Thanks to its training and context (and behind-the-scenes protocols like MCP), it knows how to translate your request into a full-stack application. It will generate the frontend UI, set up the backend routes/functions, configure the database models, and even integrate any AI or external services you mentioned. You can watch the app being scaffolded in minutes, without writing a single line of code. (Yes, it feels like sci-fi.) Fine’s AI can even answer questions about the code or make tweaks if you ask, just like a human collaborator.

- Iterate and Refine: You can test the app right in the browser. Fine provides a development preview URL where your app is running. Try logging in, adding some data, or asking the AI chatbot a question (in our example app). If something isn’t quite right, you can go back and ask Fine’s AI to adjust it – e.g., “Make the budget tips more formal,” or “Add a chart for spending over time.” The AI will modify the code accordingly. This tight feedback loop is where MCP’s context power shines: the AI always has the context of your app’s state and can seamlessly incorporate new requirements.

- Deploy with One Click: Happy with the result? Deploy it! Fine handles the entire deployment process – packaging your app, deploying it to its global edge infrastructure, setting up domains, etc. (Fine: Anyone can build.). You just hit Deploy, and your app goes live to the world. Because everything is on Fine’s Cloudflare-powered stack, your app will automatically scale and stay performant. Fine even gives you a custom domain, or you can bring your own. Congratulations, you built an AI-powered app in a weekend (or less)!

Throughout this process, MCP is quietly at work ensuring your AI functionalities integrate smoothly. For example, when your personal finance app’s chatbot needs to pull in the user’s latest transactions from the database, Fine’s MCP integration makes that as easy as a function call. The AI doesn’t hallucinate the data; it retrieves it via the MCP server that Fine set up for the database. When the app’s AI needs to advise the user, it can use relevant context (perhaps a budgeting formula or the user’s past spending behavior) fetched through MCP. As a builder, you didn’t need to code any of that plumbing; you described the outcome, and Fine’s platform (with MCP as a core enabler) made it happen.
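A rough sketch of "retrieve instead of hallucinate" for the finance example: a tool runs a real query, and only the returned rows go into the prompt. The table schema, the `latest_transactions` and `build_prompt` helpers, and the sample rows are assumptions made for illustration, with an in-memory SQLite database standing in for the app's database. This is not how Fine implements it internally.

```python
import sqlite3
from typing import List, Tuple

Row = Tuple[str, str, float]

# In-memory database with a hypothetical schema and sample data.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions (user_id TEXT, day TEXT, merchant TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?, ?, ?)",
    [
        ("u1", "2024-05-01", "Grocer", 54.20),
        ("u1", "2024-05-03", "Cafe", 4.50),
        ("u1", "2024-05-02", "Transit", 2.75),
        ("u2", "2024-05-03", "Books", 19.99),
    ],
)


def latest_transactions(user_id: str, limit: int = 2) -> List[Row]:
    """Tool body: fetch one user's most recent transactions, newest first."""
    return conn.execute(
        "SELECT day, merchant, amount FROM transactions "
        "WHERE user_id = ? ORDER BY day DESC LIMIT ?",
        (user_id, limit),
    ).fetchall()


def build_prompt(question: str, rows: List[Row]) -> str:
    """Place the retrieved rows in front of the user's question."""
    lines = [f"- {day} {merchant}: {amount:.2f}" for day, merchant, amount in rows]
    return "Recent transactions:\n" + "\n".join(lines) + f"\n\nUser question: {question}"


rows = latest_transactions("u1")
prompt = build_prompt("Where can I cut back?", rows)
```

The query is parameterized by `user_id`, so the model only ever sees the current user's rows; another user's data never reaches the prompt.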

Conclusion

The Model Context Protocol is accelerating the evolution of AI apps from siloed chatbots to truly interactive, context-aware agents. It simplifies the hardest parts of AI app development – integrating data, maintaining memory, and performing actions – by offering a standard way to do it all. Fine.dev takes this a step further by packaging MCP’s capabilities into a builder-friendly platform. With Fine, you can go from idea to a live AI-powered application incredibly fast, without getting bogged down by integration code or infrastructure setup. It’s a conversational, intuitive way to build software: you tell Fine what you need, and it leverages AI (and protocols like MCP) to turn that into reality.